The chart is one of the goofiest things I’ve ever seen. I can’t believe a professional would present something like that. What would hunting/buck populations actually look like on the ground if the buck survival chart were the same as the one presented for does? I’d just guess you’d have a lot more happy hunters.


Montana Mule Deer Mismanagement

- Thread starter rogerthat


kwyeewyk

Well-known member

> Is that depicting the survival rate for Montana's herd that is hunted throughout the rut? Or a herd that is not hunted at all?

All it shows is a 50% survival rate every year for bucks and 85% for does. I'd say he was just trying to show that it's not feasible to have "half" of all bucks be 4.5 years or older. It would be a much more useful exercise if he used actual or predicted survival rates under different hunting scenarios.
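A rough way to see that point: if every age class has the same annual survival and the herd has settled into a stable age structure (both simplifying assumptions, not necessarily what the chart's author used), each age class is a fixed fraction of the one before it, so the share of adults at least k years past yearling age works out to survival**k. A minimal sketch using the chart's 50% buck and 85% doe rates (the function name is hypothetical):

```python
def share_at_least(survival: float, years_past_yearling: int) -> float:
    """Share of animals aged 1.5+ that are at least `years_past_yearling`
    years older than a yearling.

    Assumes (hypothetically) constant annual survival at every age and a
    stable age structure. Age class k is then survival**k times the size of
    the yearling class, a geometric series whose tail from class k, divided
    by its total, simplifies to survival**k.
    """
    if not 0.0 <= survival < 1.0:
        raise ValueError("survival must be in [0, 1)")
    return survival ** years_past_yearling


# Going from 1.5 to 4.5 years old means surviving three more years.
for label, s in [("bucks", 0.50), ("does", 0.85)]:
    print(f"{label}: {share_at_least(s, 3):.1%} of adults are 4.5 or older")
```

At 50% survival that is 0.5³ = 12.5%, about one adult buck in eight, versus roughly 61% for does at 85%. That is why "half of all bucks 4.5 or older" is out of reach under the buck rate shown.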

rogerthat

Well-known member

- Joined: Aug 29, 2015
- Messages: 3,509

Really that’s the conversation. What’s the survival rate under current management? What’s the target survival rate?

WanderWoman

Well-known member

In Montana with hunted populations, total annual buck mortality is 46% in mountain foothill areas, 41% in timbered breaks, and 61% in prairie badlands. So survival rates are 54%, 59%, and 39%, respectively, in over-the-counter (OTC) areas of MT.
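The conversion here is just survival = 1 - mortality. As a sketch, assuming (hypothetically) that the same rate applies every year from age 1.5 on, this shows that conversion plus the share of yearling bucks that would make it to 4.5 in each landscape type. The dictionary and function names are illustrative only.

```python
# Total annual buck mortality in Montana OTC areas, as quoted in the post.
MT_OTC_BUCK_MORTALITY = {
    "mountain foothills": 0.46,
    "timbered breaks": 0.41,
    "prairie badlands": 0.61,
}


def summarize(mortality_by_area: dict[str, float], years: int = 3) -> dict[str, tuple[float, float]]:
    """Return (annual survival, share surviving `years` more years) per area.

    Assumes the annual rate is constant across years and age classes,
    which is a simplification.
    """
    summary = {}
    for area, mortality in mortality_by_area.items():
        survival = 1.0 - mortality            # survival = 1 - mortality
        summary[area] = (survival, survival ** years)
    return summary


for area, (s, reach) in summarize(MT_OTC_BUCK_MORTALITY).items():
    print(f"{area}: survival {s:.0%}, yearlings reaching 4.5 about {reach:.0%}")
```

Under that assumption, roughly 16%, 21%, and 6% of yearling bucks would reach 4.5 in foothills, breaks, and badlands, respectively.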

A ‘summary’ of sorts across western states would put OTC buck survival (after their yearling year) at 40-60%, so this jibes too, I guess. Yearling buck survival is around 50%, and fawn survival varies, as was mentioned in that post.

For limited-entry (LE) areas, survival is 60-70% (I struggle with this one, since “LE” can mean 50 permits or 500, which affects the survival rate).

It’s important to note that, up to some level, hunting mortality substitutes for natural mortality in bucks. Similar to how predation is largely compensatory for fawns, hunting mortality is somewhat compensatory for bucks (up to a point that varies year to year with nutrition and weather).
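One common simplified way to picture "compensatory up to a point" is a threshold model: below some harvest rate, hunting mostly removes animals that would have died anyway, so annual survival stays at its unhunted level; above it, each extra unit of harvest comes straight off survival. The sketch compares that with fully additive mortality. The 75% baseline (the middle of the 70-80% unhunted range mentioned in this post) and the 15% threshold are hypothetical inputs, and in reality the threshold shifts year to year with nutrition and weather.

```python
def survival_additive(s0: float, harvest_rate: float) -> float:
    """Fully additive: harvest deaths stack on top of natural deaths."""
    return s0 * (1.0 - harvest_rate)


def survival_compensatory(s0: float, harvest_rate: float, threshold: float) -> float:
    """Compensatory up to `threshold`, additive beyond it.

    `s0` is annual survival with no hunting. The threshold can't exceed
    natural mortality (1 - s0): hunting can only replace deaths that
    would otherwise have happened.
    """
    if not 0.0 <= threshold <= 1.0 - s0:
        raise ValueError("threshold must be between 0 and 1 - s0")
    if harvest_rate <= threshold:
        return s0                                     # harvest replaces natural deaths
    return max(0.0, s0 - (harvest_rate - threshold))  # excess harvest is additive


S0, THRESHOLD = 0.75, 0.15   # hypothetical values for illustration
for h in (0.10, 0.25, 0.40):
    print(f"harvest {h:.0%}: additive {survival_additive(S0, h):.1%}, "
          f"compensatory-to-threshold {survival_compensatory(S0, h, THRESHOLD):.1%}")
```

With these made-up inputs, a 10% harvest leaves survival at 75% under the threshold model but drops it to 67.5% if fully additive; by a 40% harvest both models put survival down around 45-50%.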

In completely unhunted areas, buck survival is around 70-80%, and this can vary from year to year. Given how much energy bucks expend during the rut, their winter survival should vary more than adult doe survival does, though not to the extent that fawn survival does.

So using FWP’s example, take an unhunted population of 100 fawns. Roughly 50 are buck fawns. At 50% fawn survival, 25 buck fawns make it to their first birthday. (I think that’s high for fawn survival; more realistically it’s closer to 30%, but again, there’s lots of variability.) Yearling buck survival is 50%, so now we have 12 two-year-olds. At 80% survival for the remainder, that leaves 10 three-year-olds, 8 four-year-olds, and 6 five-year-olds. So from a starting population of 100 fawns (not counting the does and bucks already out there), only about 6 (6% of all fawns, or about 12% of the buck fawns) would survive to age 5 if their adult survival rate was 80%.
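That walk-through is a simple cohort projection. A sketch that reproduces it, with the rates as parameters: the defaults are the illustrative rates from the post, the 50:50 sex ratio is an assumption, and expected values are left unrounded, so the two-year-old count comes out as 12.5 rather than 12. The function name is hypothetical.

```python
def project_buck_cohort(
    fawns: int = 100,
    fawn_survival: float = 0.5,
    yearling_survival: float = 0.5,
    adult_survival: float = 0.8,
    to_age: int = 5,
) -> dict[int, float]:
    """Expected number of bucks from one fawn crop alive at each birthday.

    Assumes a 50:50 sex ratio and the same adult survival every year from
    age 2 on; these are illustrative rates, not measured ones.
    """
    alive = {}
    n = fawns * 0.5 * fawn_survival      # buck fawns that reach age 1
    alive[1] = n
    n *= yearling_survival               # yearlings that reach age 2
    alive[2] = n
    for age in range(3, to_age + 1):     # adult years
        n *= adult_survival
        alive[age] = n
    return alive


for age, n in project_buck_cohort().items():
    print(f"age {age}: {n:.1f} bucks")
```

With the defaults this gives 25, 12.5, 10, 8, and 6.4 bucks at ages 1 through 5, matching the post's rounded numbers. Setting `fawn_survival=0.3` (the more realistic guess) drops the five-year-olds to about 3.8, and an adult survival of 0.5, the middle of the 40-60% OTC range, leaves about 1.6.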

This is useful for illustrative purposes, but environmental variation, luck, an individual animal’s decisions, and so on all interact to determine its survival too.
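To get a feel for how much luck alone moves those numbers, here is a small Monte Carlo sketch. Instead of using expected values, it flips a survival coin for each buck every year. As a purely hypothetical stand-in for weather, it also draws each adult year's survival uniformly from the 70-80% unhunted range given above. Fawn and yearling survival are fixed at 50%. The function name and seed are illustrative.

```python
import random
import statistics


def simulate_five_year_olds(runs: int = 10_000, seed: int = 1) -> list[int]:
    """Number of 5-year-old bucks from 50 buck fawns, in each simulated run."""
    rng = random.Random(seed)

    def survivors(n: int, p: float) -> int:
        # Each of n bucks independently survives the year with probability p.
        return sum(rng.random() < p for _ in range(n))

    results = []
    for _ in range(runs):
        n = survivors(50, 0.5)                 # fawn year: 0 -> 1
        n = survivors(n, 0.5)                  # yearling year: 1 -> 2
        for _ in range(3):                     # adult years: 2 -> 5
            n = survivors(n, rng.uniform(0.70, 0.80))  # hypothetical yearly variation
        results.append(n)
    return results


results = simulate_five_year_olds()
print("mean:", round(statistics.mean(results), 2))
print("range:", min(results), "to", max(results))
print("share of runs with 3 or fewer:", sum(r <= 3 for r in results) / len(results))
```

The mean should land a little above 5 (the expected value here is 50 × 0.5 × 0.5 × 0.75³, about 5.3), but individual runs spread widely around it. That spread comes from chance alone, before any real differences between animals are considered.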

rogerthat

Well-known member


Are those rules of thumb or real-world survival rates? Does FWP know what its actual survival rates are? Do changes in season structure affect buck survival rates significantly? Common sense says they would. Compare, for example, an October season when bucks are less vulnerable with a November hunt when bucks are very vulnerable to harvest. This should be reflected in success rates as well.

WanderWoman

Well-known member

So the rates at the top are from an FWP publication, “Mule Deer and Whitetailed Deer Conservation and Management in MT” or something like that. You should be able to google it or search for it on FWP’s website. One of the bios sent it to me a long time ago.

The other rates are from a friend of mine who runs IPMs for some of the other western states. It’s a general 100,000’ view but probably a conglomeration of collar data over the years.

Yeah, there’s a point where, depending on season structure, harvest goes from compensatory to additive. And having a season when bucks are more vulnerable timing-wise would have a similar effect to having a season where bucks are more vulnerable habitat-wise (land ownership, openness, road density, etc.).

It would be interesting to see data, if available, from states that used to have OTC rut hunting and switched to OTC non-rut hunting: whether and how harvest rates, mortality rates, and survival rates changed, tracked over the long term to account for variability in weather. There’s also the question of whether, and how much, hunter congestion would increase during the first part of the season with such a switch (if nothing else changed).

Edited to add that the mortality rates up top are a combination of harvest mortality and natural mortality, not the same as harvest success rates, although it’s all related.
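The distinction is easy to blur, so here is a worked example with made-up numbers (none of them are FWP figures). Harvest success is measured per hunter, harvest rate per buck, and total mortality also folds in natural deaths. For simplicity the sketch assumes natural deaths happen after the season and only among bucks that survived it, and that each hunter takes at most one buck. The function name is hypothetical.

```python
def season_summary(
    bucks_pre_season: int,
    hunters: int,
    bucks_harvested: int,
    natural_mortality: float,
) -> dict[str, float]:
    """Contrast hunter success, harvest rate, and total annual mortality."""
    harvest_rate = bucks_harvested / bucks_pre_season   # share of bucks killed by hunters
    hunter_success = bucks_harvested / hunters          # share of hunters who tagged a buck
    # Survive the season, then survive the rest of the year (assumed sequential).
    annual_survival = (1 - harvest_rate) * (1 - natural_mortality)
    return {
        "harvest_rate": harvest_rate,
        "hunter_success": hunter_success,
        "total_mortality": 1 - annual_survival,
    }


# Hypothetical unit: 1,000 bucks, 800 hunters, 300 bucks taken, 15% natural loss.
for name, value in season_summary(1_000, 800, 300, 0.15).items():
    print(f"{name}: {value:.1%}")
```

Here hunters succeed 37.5% of the time, they remove 30% of the bucks, and total buck mortality for the year is 40.5%. Those are three different numbers describing the same season.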


Irrelevant

Well-known member

Those are very specific results. Can you point us to that/those studies?

WanderWoman

Well-known member

> Those are very specific results. Can you point us to that/those studies?

Stone_Ice_1

Well-known member

> Could somebody with a stats degree explain to me what happens when you shoot the surviving does?

They die.

Sorry, couldn't resist.

Lots of interesting info in there, and most of it is still relevant today. I would imagine the info about adult male mortality rates from hunting is outdated. Those rates must be higher today than they were in the late '80s/early '90s. Hunters are just way more efficient these days with the advances in technology.

Personally, I believe that there could be a lot of information in a study from the 80s and 90s that would be less relevant now. For example, much of what we considered winter range for mule deer then is now inhabited by hundreds if not thousands of elk. The mule deer now winter above the elk in deep snow or go below the elk to the river bottoms. This has to affect survival rates.

Raptor presence has changed markedly, with eagles going from fairly rare in the 70s and 80s to very common now. I won't bother to speculate on whether this makes a noticeable difference in mule deer survival rates, but in my opinion anyone using 40-year-old data is speculating.

We had practically no wolves before 1995.

Grizzlies were quite rare, and we often didn't carry a gun in the mountains. We had never heard of bear spray.

Many roads and trails in the area where I live in Park County have been closed, which I thought was great, but game populations in the mountains have steadily declined. I wonder whether the closures keep people off the predators and actually have the opposite effect from what I expected.

Anyway, I could go on with further speculation but will just say that some current studies would be desirable in my opinion. At some point this becomes like using vehicle collision data from the horse and buggy days to shore up wildlife management practices today. We need current data a lot worse now than we did in the 1980s. I don't see it happening.


Irrelevant

Well-known member

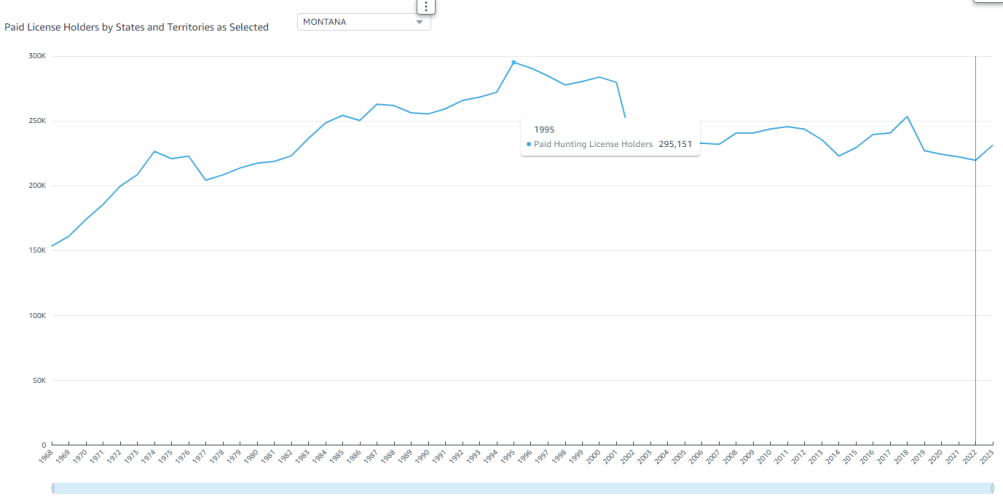

The counter could be that there are fewer hunters in MT today than then.

That could be true, but even if there were more hunters back then, I bet hunter densities on public land are much higher now. Hunters today have better technology and, for the most part, also hunt smarter. It wasn’t uncommon in the 80s and 90s for hunters in eastern MT to drive out on points and shoot at deer as they ran out of coulees. I know this still happens today, but I don’t think it’s as common as it used to be. Now, when those deer run out to 500 yards and stop to look back, they are in range of a lot of the rifles people are using today.

Roscoe P Coal

Active member

- Joined: May 29, 2023
- Messages: 56

antlerradar

Well-known member

> One of the crew just sent me a photo of the teeth on a buck they took on Friday. Hardly anything for incisors or molars. Crazy that the thing could eat anything. It was soon going to need IV food, with no teeth left to browse or chew anything.

I would really like to know how many times the two bucks you posted up have been passed over by other hunters looking for a buck with more desirable antlers.

On private land, deer like this will live and die of old age. Looks like they can get some age on public land too.

Bucks like these two make me question if the long rut season is the best way to keep bucks from getting old.


SAJ-99

Well-known member

This is great info. Thanks for sharing. Last winter showed us there is considerable variability in survival rates. I saw some data from Wyoming range where 100% of collared fawns died, along with 70% of bucks and 60% of does. My main criticism of MT FWP is that AHM (adaptive harvest management) isn't used in a predictive way. The model is changed only after new data says things went into the tank. For example, if you know that 2021 was a bad drought year, and you know deer are going into winter with low body fat, and you know that will affect 2022 fawn numbers, why the hell give out 11,000 Region 7 B tags? I guess the argument could be "It's compensatory"?

antlerradar

Well-known member

To be fair, I don't think they gave out 11,000 tags in '22. Your point is a good one, though; it wasn't hard to see this crash coming.

SAJ-99

Well-known member

They did in 2021, which was when the drought hit. The fawns would have been born in 2022. Sorry, I could have been clearer.

Look, it is probably legit from a biological point of view to say that reducing the herd would have been compensatory and probably helped the survivors, but this is where messaging gets messy. Most hunters are not going to agree with cutting a ton of does even when food is scarce, especially given that it will tank deer hunting for a few years.
